Recommendation algorithms promise marketers their Holy Grail: personalised relationships with all their customers at a reasonable price. Since the quality of customer relations is a decisive factor in customer satisfaction and loyalty, algorithmic recommendations hold out the promise of quality interactions with customers and meaningful marketing actions.

However, large-scale customisation can only be achieved by using customer data and the digital traces customers leave behind. In a world where privacy awareness is growing (GDPR) and the legal framework is tightening (e-privacy), how can we balance the needs of customers seeking a clear understanding with those of companies seeking economies of scale?

This is the question I would like to explore in today’s article. This article will be used as a basis for my presentation at the BAM Congress on 5 and 6 December 2019 in Brussels (see presentation below).

Summary

- Large-scale personalisation is an essential factor for business success

- The development of sensitivity to the protection of personal data

- Personalising customer relations: a marketer’s dream

- 4 points of action to gain your customers’ trust

- Conclusions

Some figures about the effects of personalisation

Algorithmic recommendations are now used on a massive scale to personalise customer interactions. They have spread to every B2C sector, and their effectiveness is well established. Here are some figures:

- Netflix has nearly 160 million subscribers in 190 countries. Algorithmic recommendations generate 80% of the consumption on the platform.

- Netflix makes 1 billion euros of profit each year thanks to algorithmic personalisation, which reduces churn (source: Techjury).

- 35% of Amazon purchases are based on algorithmic recommendations: “customers who have bought this have also bought this” (source: McKinsey).

- Alibaba has increased its sales by 20% by personalising the landing pages of its website (source: Alizila).

- 500 hours of content are uploaded to YouTube every minute, and online video consumption has doubled among children in 4 years. At the same time, 70% of the consumption on YouTube comes from the recommendation algorithm (sources: CNet, Tubefilter, Washington Post).

- 100% of the results offered by Google are personalised. It is the most widely used personalising algorithm in the world.

Respect for privacy

As early as 2017, a survey revealed that only 10% of American citizens believed they had complete control over their data. Moreover, only 25% of them trusted companies to protect their data. Repeated scandals (e.g. Cambridge Analytica) have increased this awareness, all the more so since the entry into force of the GDPR.

We are also witnessing a proliferation of legislation and attempts at regulation on related subjects: filter bubbles, transparency of algorithms, digital addictions, and so on. We cannot say that our elected representatives are not working. Respect for privacy seems to be motivating them.

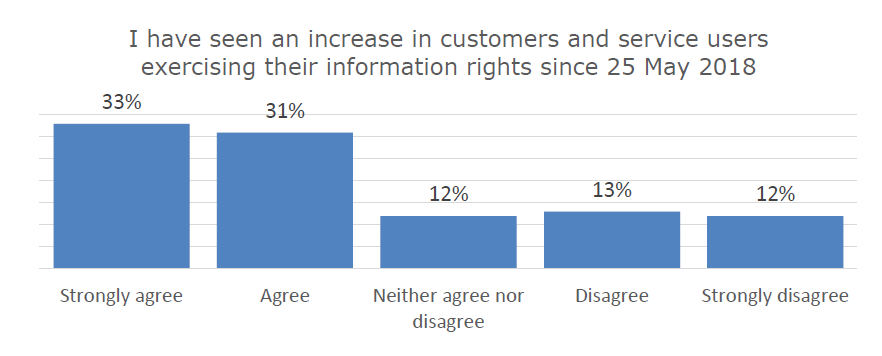

64% of English firms noted an increase in complaints after the entry into force of the GDPR.

We can therefore expect this heightened sensitivity on all sides to be reflected in the data-protection requests that companies receive. A survey carried out in March 2019 by the ICO (the United Kingdom’s supervisory authority) sheds light on the subject. Since the entry into force of the GDPR, 64% of English companies have the impression that consumers complain more often to protect their data (source: March 2019 survey, 2018/2019 ICO annual report).

The question remains, however, whether this impression is in line with reality and, above all, whether it applies to the other countries of the European Community. A study conducted by IntoTheMinds with the data protection authorities of the European Community collected accurate data for 17 countries on the effect of the GDPR. The result: between 2017 and 2018, the number of complaints received by the supervisory authorities of the 17 countries studied rose on average by 109%, or 2037 complaints.

Detailed results are available below.

| Country | Change in the number of complaints relating to personal data after the entry into force of the GDPR (in %, 2018 compared to 2017, 1 January to 31 December of each year) | Change in the number of complaints relating to personal data after the entry into force of the GDPR (in number of complaints, 2018 compared to 2017, 1 January to 31 December of each year) |
| --- | --- | --- |
| Iceland | -3% | -3 |
| Croatia | +257% | +3527 |
| Slovakia | +54% | +32 |
| Latvia | +39% | +346 |
| Cyprus | +45% | +154 |
| United Kingdom | +98% | +20642 |
| Norway | +56% | +123 |
| Denmark | +149% | +3302 |
| Lithuania | +79% | +379 |
| Romania | +36% | +1279 |
| Luxembourg | +125% | +250 |
| Sweden | +480% | +1180 |
| Ireland | +45% | +1240 |
| Liechtenstein | +270% | +1465 |
| Hungary | +22% | +177 |
| Slovenia | +43% | +242 |
| Bulgaria | +65% | +308 |
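For readers who want to check the averages quoted above, here is a minimal Python sketch that recomputes them from the rows of the table. The variable names are my own, and the simple unweighted mean is an assumption about how the averages were obtained. Under that assumption, the percentage column averages to about 109%, and the absolute column to about 2037.8, which truncates to the 2037 quoted in the text.

```python
from statistics import mean

# (country, change in %, change in number of complaints), copied from the table
complaint_changes = [
    ("Iceland", -3, -3),
    ("Croatia", 257, 3527),
    ("Slovakia", 54, 32),
    ("Latvia", 39, 346),
    ("Cyprus", 45, 154),
    ("United Kingdom", 98, 20642),
    ("Norway", 56, 123),
    ("Denmark", 149, 3302),
    ("Lithuania", 79, 379),
    ("Romania", 36, 1279),
    ("Luxembourg", 125, 250),
    ("Sweden", 480, 1180),
    ("Ireland", 45, 1240),
    ("Liechtenstein", 270, 1465),
    ("Hungary", 22, 177),
    ("Slovenia", 43, 242),
    ("Bulgaria", 65, 308),
]

# Unweighted averages across the 17 countries (assumed method)
pct_mean = mean(pct for _, pct, _ in complaint_changes)
abs_mean = mean(count for _, _, count in complaint_changes)

print(f"Average change in %: {pct_mean:+.1f}")
print(f"Average change in complaints: {abs_mean:+.1f}")
```

Note that an unweighted mean gives Iceland and the United Kingdom the same weight, which is why a single large percentage such as Sweden's pulls the average up noticeably.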

We can see that the figures reflect very different realities. Some countries are seeing significant increases. But apart from the United Kingdom, which has high volumes of complaints (which explains the responses of English DPOs to the ICO survey mentioned above), the number of complaints remains relatively modest in absolute terms. Compared to the population of the countries concerned, it can even be considered insignificant. On average, in 2018, fewer than 5 out of 10,000 people complained about their data.

Fewer than 5 out of 10,000 people filed a complaint about their personal data in 2018.

It is therefore necessary to put into perspective what I said at the beginning of this section. Despite all the efforts made to make the public aware of the importance of their data, the message still seems hard to get across. The digital traces left during our online activities are largely neglected and their importance misunderstood, so citizens take little interest in them. If the GDPR has made “privacy-by-design” popular, I like to say that this regulation was designed with “flaw-by-design”. Its design flaw is the principle of consent, an Achilles’ heel to which we will return later.

A personalised relationship, a marketer’s dream

Developing personalised relationships with its customers is the dream of any company. Indeed, a quality, personalised link helps to build customer loyalty. And as we all know, increased loyalty leads to improved profitability. Maintaining personalised relationships has a price. This price is affordable when you have few customers (in B2B, for example). But how do you personalise on a large scale? Large-scale personalisation has been made possible by algorithms and by the collection of customer data in unprecedented quantities. The democratisation of data storage and computing capabilities (thanks to Amazon) therefore promises to personalise each customer relationship at a lower cost.

In 2015, 39.7% of American respondents said they did not want personalisation.

Here again, however, there is a contradiction. In an American study published in 2015, 39.7% of respondents said they did not want personalisation, and only 6.2% asked for “extreme” personalisation. Is it a real desire of consumers to keep their free will in their choices? Or is it merely a lack of knowledge of the mechanisms already at work (but invisible) in all aspects of their daily lives? The second option is the more likely. Consumers are not aware of the use of personalisation algorithms on all the sites they visit and in all the applications they use.

So, there is a problem that we need to solve to gain consumer confidence, which brings us to the fourth and final part of this article.

How to build customer confidence using interactions based on their data

Data security is a fundamental element of trust. So, I’m not even going to talk about it because it’s a prerequisite. Make sure you meet the ISO 27001 standard, and you should generally be well equipped to focus on the following actions.

Action 1: Educate, simply

In 2018 I updated a study on privacy policies, and carried out another one on the 20 most used websites and applications. It took between 30 minutes (Pinterest) and 51 minutes (WhatsApp) to read the privacy policies. At a rate of 120 valid words per minute, understanding the legal texts of these 20 sites took more than 11 hours. 56% of Internet users accept the terms of use of the sites without reading them (source). “Consent” is the Achilles’ heel of the GDPR: companies can do whatever they want with your data since you have consented to it. So, we can reasonably consider that it is necessary to simplify Internet users’ task and to educate them. By doing so, you will be able to stand out from your competitors and gain the respect of your users. At the same time, they will develop a greater awareness of the protection of their data, which can only be of benefit to you.
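To make the reading-time arithmetic concrete, here is a small Python sketch. The function name and the word counts are hypothetical: the counts are simply back-computed from the 30 and 51 minutes quoted above at 120 words per minute, and are not the measured lengths of the actual policies.

```python
WORDS_PER_MINUTE = 120  # reading rate quoted in the article


def reading_minutes(word_count: int, wpm: int = WORDS_PER_MINUTE) -> float:
    """Return the minutes needed to read `word_count` words at `wpm` words per minute."""
    if wpm <= 0:
        raise ValueError("wpm must be positive")
    return word_count / wpm


# Hypothetical word counts, back-computed from the quoted reading times
# (30 min and 51 min at 120 words per minute); not measured values.
policies = {"Pinterest": 3_600, "WhatsApp": 6_120}

for site, words in policies.items():
    print(f"{site}: {reading_minutes(words):.0f} min")

# Cumulative reading time for the policies listed, in hours
total_hours = sum(reading_minutes(words) for words in policies.values()) / 60
print(f"Total: {total_hours:.2f} h")
```

Running the same function over the word counts of all 20 policies is how a cumulative figure such as the 11 hours mentioned above can be obtained.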

It takes between 30 minutes (Pinterest) and 51 minutes (WhatsApp) to read the privacy policies of the applications we use on a daily basis

In practice, how can this be done? Let me give you the example of RTBF, for which I worked from 2015 to 2019. In particular, I initiated a video filmed with the Belgian presenter Adrien Devyver that explains in a few simple sequences what RTBF does with its users’ data. It is a fun and simple way to get a sometimes-complicated message across.

Action 2: Give back control to the users

Personalisation algorithms are essentially opaque mechanisms. Allowing the customer to manipulate them helps to restore confidence in their functioning. Since the GDPR came into effect, several initiatives have moved in the right direction. One example is YouTube, which now allows you to delete or pause your viewing history. Other initiatives exist, such as the one built on the Spotify interface by Nava Tintarev and her colleagues and presented at the RecSys 2018 conference.

Action 3: Stop using other people’s data

For years I have been calling for the construction of “data sovereignty”. Companies must become autonomous in the management of their data and no longer depend on third parties for profiling their customers or users. This principle has been applied by several of our customers (including RTBF with the launch of its unique login in 2016). Looking back, everyone welcomes this choice. Data control is better, and leakage risks are contained. Most importantly, the company maintains a 360-degree view of customer data and can respond with certainty to all customer requests. A logical consequence of these choices is that customer data cannot be sold. If your strategy is to rely only on your 1st-party data, it is out of the question to resell it. This would be inconsistent with the promise made to the user and, of course, it would jeopardise the trust they have placed in you.

Action 4: Build trust little by little

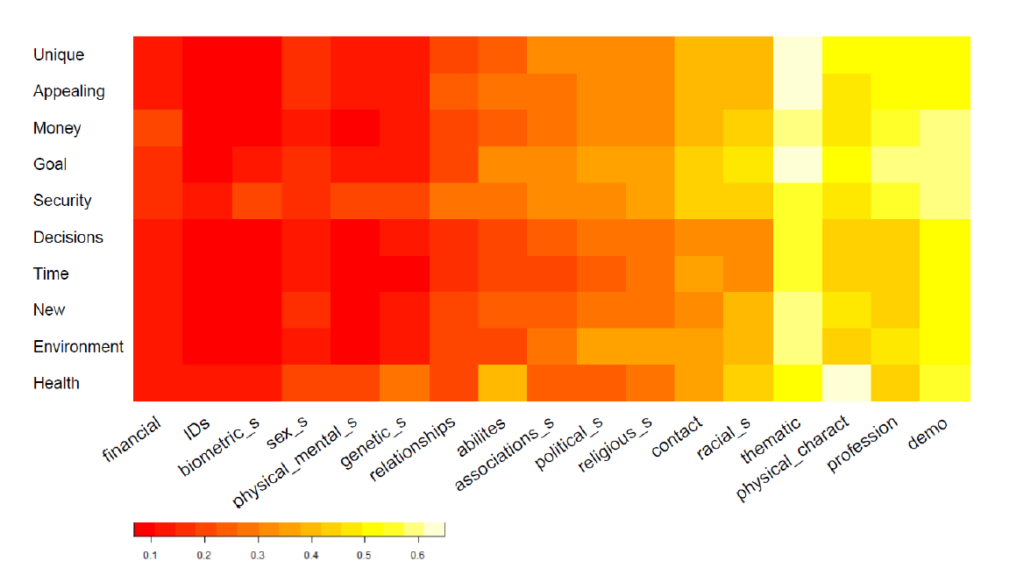

It is not possible to collect all the data necessary for fine-grained personalisation all at once. First, the GDPR prevents you from doing so (since there must be a specific purpose for collecting the data). Second, collecting data without justification would again undermine customer confidence in your company. What is the solution? We have already discussed this in another article. You must identify the contexts and situations that encourage users to tell you more about themselves. The study by Wadle et al. (2019) analyses 17 data categories and ten different contexts. While some types of data (interests or socio-demographics) are easily shared in any setting, others are only shared in specific situations. This is the case for biometric data, for example, where the probability of sharing only increases in the context of improved security.

In conclusion

In this article, we have seen that large-scale personalisation requires the use of recommendation algorithms. The latter can only work with customer data. However, customers are increasingly suspicious of the sharing of personal data, even if this mistrust is not reflected in complaints to privacy authorities. A strategy must therefore be put in place to build the trust needed for customers to share their personal data.

4 points of action have been proposed:

- educate entertainingly on the functioning of the algorithm

- provide the user with means of control over the personalisation process

- use only the data that you have collected yourself (1st-party data)

- define specific, strategic contexts for the collection of additional data

Do you have any questions, comments or requests? Do not hesitate to contact us. We are here to help you.
