The RecSys 2020 conference took place last week in a new format because of the pandemic. Like every year, I should have joined the rest of the scientific community, this time in Rio de Janeiro, to talk about recommendation systems. Covid-19 changed our plans, and the conference was held online. Despite the move to a virtual format, the time difference and the lack of face-to-face meetings, I was still able to get a lot out of it. In this article, I look back at the opening keynote given by Filippo Menczer of Indiana University. Entitled “4 reasons why social media make us vulnerable to manipulation”, it took stock of a complex subject and exposed the real role of social networks in the formation of echo chambers.

5 ideas to remember

- Those most vulnerable to manipulation are not exposed to those trying to establish the truth (fact-checkers).

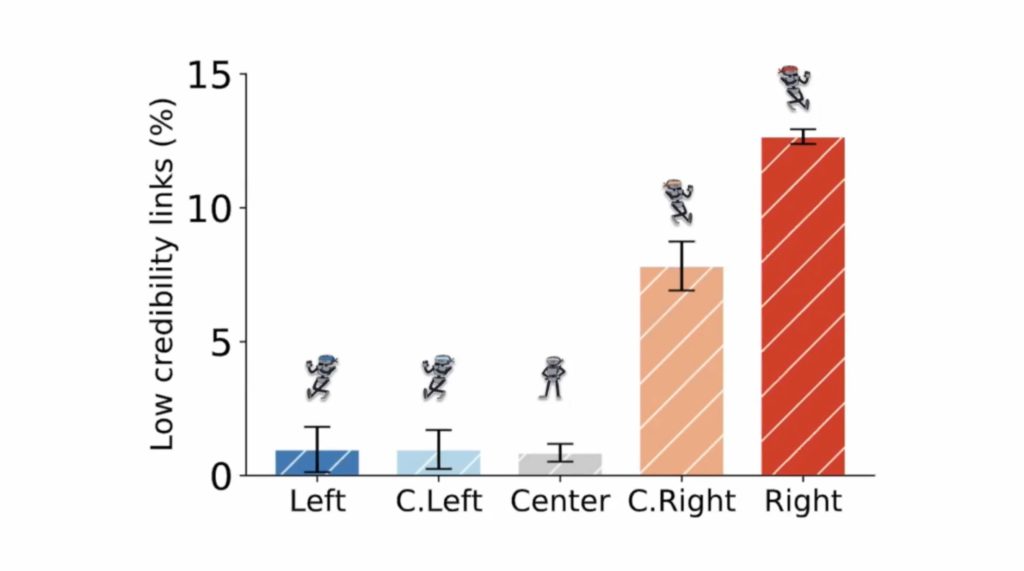

- Conservatives are the most likely to share false information.

- The virality of incorrect and accurate information follows almost the same dynamics.

- Twitter tends to pull the opinions of Democrats towards the centre, but not those of Republicans.

- When bots infiltrate 1% of a network, they can control it and cause the quality of the information broadcast to drop.

Idea 1: Echo chambers

I have already devoted many articles on this blog to echo chambers and filter bubbles. Filippo’s first point drew on his own experience with echo chambers, after his laboratory was “attacked” through a disinformation campaign.

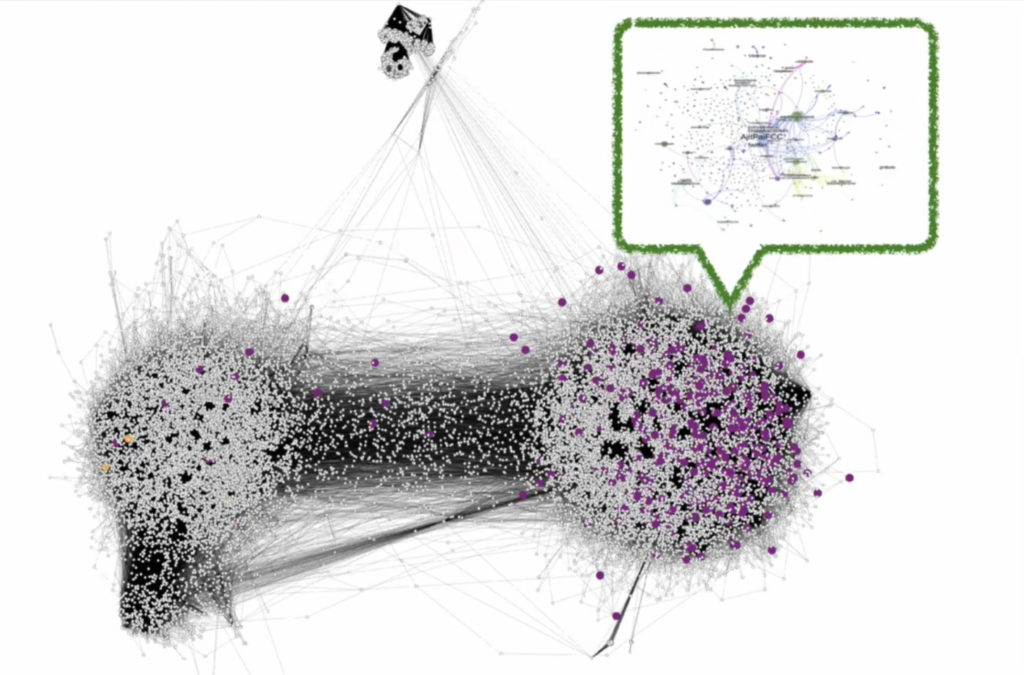

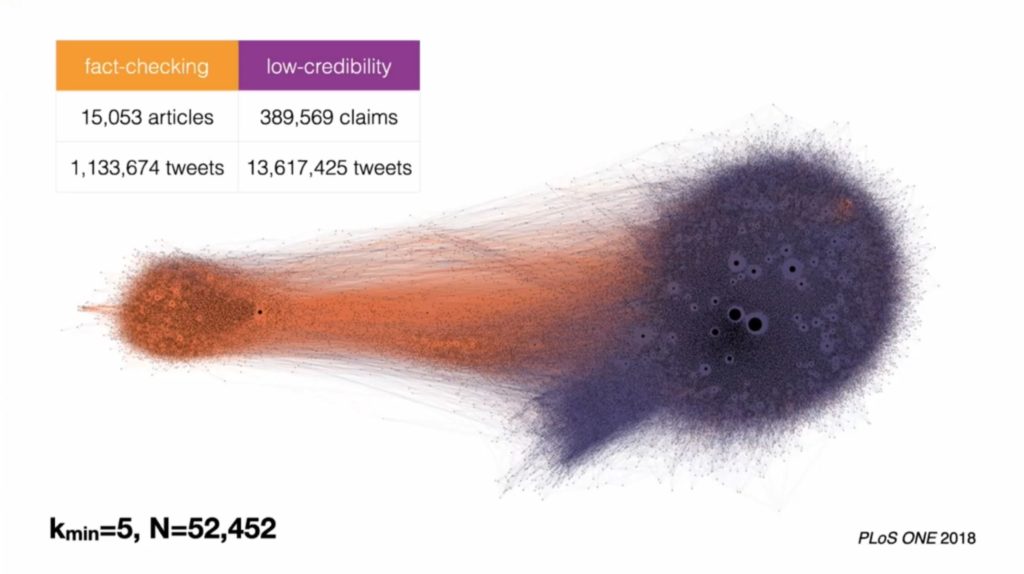

An analysis of these attacks showed that they originated from a group of people and bots on the conservative (Republican) side. This is illustrated in the image below, where you can see that the disinformation network (circled in green) is entirely embedded among the Twitter accounts on the right of the political spectrum. The two yellow dots on the left (fact-checkers) go unheard. In other words, people who are vulnerable to disinformation have no opportunity to be exposed to the facts that might change their opinion.

Echo chambers are not so much the result of recommendation algorithms (another argument against the filter-bubble thesis) as of the behaviour of individuals themselves. In a very enlightening simulation, Filippo’s team showed how echo chambers form: it is the “unfollow” action (ceasing to follow someone) that gradually produces them. And as Filippo points out, recommendation systems that aim to counteract echo chambers succeed, at best, only in slowing down their formation. The biases are in us, and social media is a catalyst that accelerates the formation of echo chambers. If you want to go deeper into the subject, I suggest you read this article.

You can play with this simulation yourself via Filippo’s laboratory page.
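For readers who prefer code to sliders, the unfollow mechanism can be sketched as a toy model. This is not the laboratory's simulation: the update rules and every parameter (`tolerance`, `influence`, the network size, the random choice of a new account to follow) are assumptions chosen only to illustrate the idea. Each agent either drifts towards an account it roughly agrees with, or unfollows an account it disagrees with and follows someone else at random.

```python
import random


def make_network(n, k, rng):
    """Random opinions in [-1, 1] and k distinct random followees per agent."""
    opinions = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    follows = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    return opinions, follows


def discordant_share(opinions, follows, tolerance):
    """Fraction of follow links whose two ends differ by at least `tolerance`."""
    links = [(i, j) for i, followees in enumerate(follows) for j in followees]
    far = sum(1 for i, j in links if abs(opinions[i] - opinions[j]) >= tolerance)
    return far / len(links)


def step(opinions, follows, tolerance, influence, rng):
    """One update: a random agent reads one followee, then drifts or unfollows."""
    n = len(opinions)
    i = rng.randrange(n)
    slot = rng.randrange(len(follows[i]))
    j = follows[i][slot]
    if abs(opinions[i] - opinions[j]) < tolerance:
        # Social influence: agreeable content pulls the reader's opinion closer.
        opinions[i] += influence * (opinions[j] - opinions[i])
    else:
        # Unfollow: drop the disagreeable account, follow a random new one.
        candidates = [c for c in range(n) if c != i and c not in follows[i]]
        follows[i][slot] = rng.choice(candidates)


def simulate(n=100, k=10, tolerance=0.4, influence=0.1, steps=20000, seed=0):
    """Run the toy model and report the discordant share before and after."""
    rng = random.Random(seed)
    opinions, follows = make_network(n, k, rng)
    before = discordant_share(opinions, follows, tolerance)
    for _ in range(steps):
        step(opinions, follows, tolerance, influence, rng)
    after = discordant_share(opinions, follows, tolerance)
    return before, after, opinions, follows


if __name__ == "__main__":
    before, after, _, _ = simulate()
    print(f"discordant links before: {before:.2f}, after: {after:.2f}")
```

Note that no recommendation algorithm appears anywhere in this sketch: the share of links between disagreeing accounts shrinks through influence and unfollowing alone, which is the point made above.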

In short, these echo chambers are the result of biases inherent in our beliefs, especially political ones. What is bound to raise questions is the scientific evidence that conservatives and ultra-conservatives are more likely to share what are modestly called “low-credibility links” (in other words, fake news).

Idea 2: Too much information

It will not have escaped anyone’s notice that we live in a society where information has become ubiquitous. The cognitive load has never been higher in the history of humanity. Recommendation systems are, therefore, potentially useful for finding the information we need. The problem is that the clutter of information that comes our way every day contains both good and bad. The question, therefore, is whether we can sort it according to its quality. The answer is no.

The virality of true and false information follows almost the same dynamics: real news is only marginally more popular than “fake news”. This is worrying.

To understand this dynamic, it is essential to know that the virality of a post depends on the structure of the social network. A network that resembles a “human” social network performs best. For this, the presence of “hubs” is essential: relay accounts that are connected to many people.
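To make the notion of “hubs” concrete, here is a minimal sketch that grows a network by preferential attachment (each newcomer links to accounts in proportion to the links they already have) and compares it with a purely random network of the same size. Preferential attachment is my stand-in for a “human-like” network, not something taken from the keynote, and the function names and sizes are illustrative assumptions.

```python
import random
from statistics import median


def preferential_attachment(n, m, rng):
    """Grow an undirected graph: each new node links to m existing nodes,
    drawn with probability proportional to their current degree."""
    neighbours = {i: set() for i in range(n)}
    # Start from a small fully connected core of m + 1 nodes.
    for a in range(m + 1):
        for b in range(a + 1, m + 1):
            neighbours[a].add(b)
            neighbours[b].add(a)
    # `stubs` lists each node once per link it has, so a uniform draw
    # from it is a degree-proportional draw.
    stubs = [a for a in range(m + 1) for _ in neighbours[a]]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for t in targets:
            neighbours[new].add(t)
            neighbours[t].add(new)
            stubs.extend((new, t))
    return neighbours


def random_graph(n, links, rng):
    """Undirected graph with `links` distinct links placed uniformly at random."""
    neighbours = {i: set() for i in range(n)}
    placed = 0
    while placed < links:
        a, b = rng.sample(range(n), 2)
        if b not in neighbours[a]:
            neighbours[a].add(b)
            neighbours[b].add(a)
            placed += 1
    return neighbours


def degree_summary(neighbours):
    """Median and maximum number of connections per account."""
    degrees = sorted(len(v) for v in neighbours.values())
    return {"median": median(degrees), "max": degrees[-1]}


if __name__ == "__main__":
    rng = random.Random(0)
    hubby = preferential_attachment(1000, 2, rng)
    links = sum(len(v) for v in hubby.values()) // 2
    flat = random_graph(1000, links, rng)
    print("with hubs:   ", degree_summary(hubby))
    print("without hubs:", degree_summary(flat))
```

In the first network, a handful of accounts end up with far more connections than the typical account, so a single post relayed by one of them reaches many feeds in one hop. The random network has the same number of links but no such relays.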

Idea 3: The platform’s bias

Conservatives regularly accuse Twitter of biasing its results and hiding certain information in favour of the Democrats.

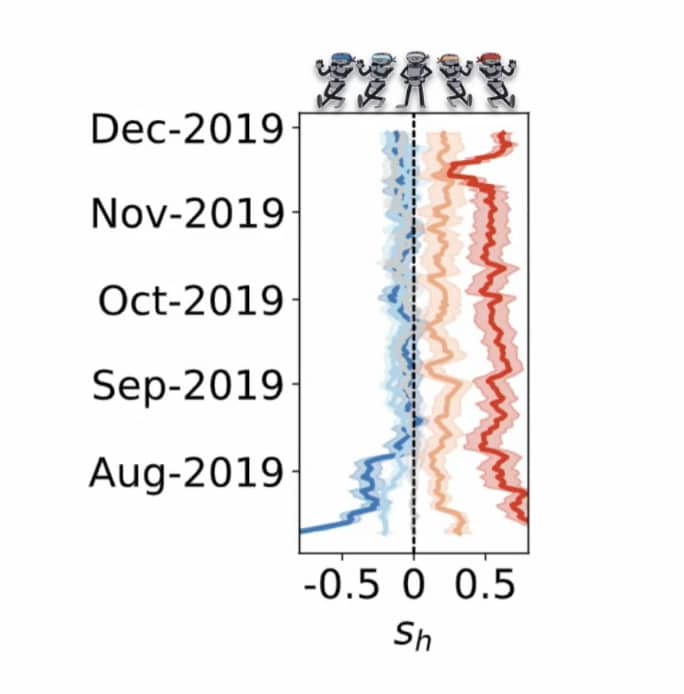

What Filippo Menczer has shown is that Twitter tends to “normalise” the opinions of Democrats. The graph below shows that the most left-wing views (in blue, in August 2019) move closer to the centre as time goes by. This is encouraging, because bringing opposing currents closer together is necessary to restore communication and avoid echo chambers.

The problem is that the same dynamic does not apply to conservatives (in red). Their opinions remain, despite the passage of time, firmly anchored on the right.

The algorithm has nothing to do with it. Jérôme Fourquet talks in his book about archipelagos, and this is precisely what we observe here: a conservative archipelago that has cut off all contact with people who hold different opinions and maintains its conservatism in a vacuum.

Idea 4: Manipulating social networks through bots

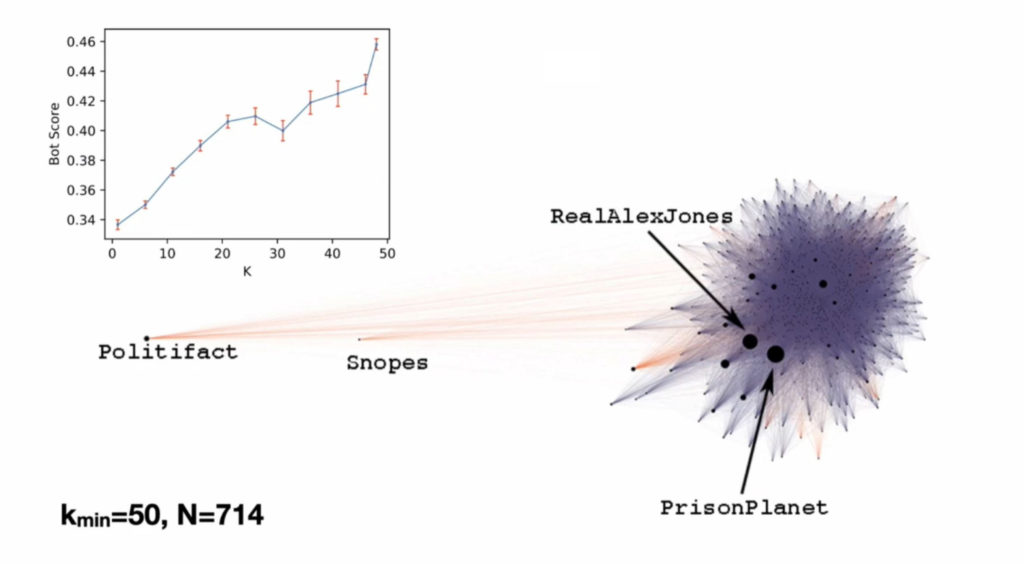

Filippo Menczer was the first to observe bot activity on Twitter, in 2010. The illustration below is, in fact, the first evidence of two bots automatically retweeting each other’s messages, as in an infernal game of ping-pong. The thickness of the line corresponds to the number of retweets (several thousand).

The visualisation below is from Hoaxy, another application from Filippo Menczer’s laboratory. It lets you visualise the speed at which fake news and accurate information spread. The user-activity data shows, logically enough, that the accounts sharing fake news are entirely isolated from those sharing accurate information.

If we go into the heart of the “fake news” cluster on the right, we discover that bots are responsible for the majority of fake news broadcasts. The accounts closest to the “heart” of this network are more likely to be bots. Relentlessly repeating false information is a technique bots use to saturate the system and make that information appear in a human’s Twitter feed, in the hope of a retweet.

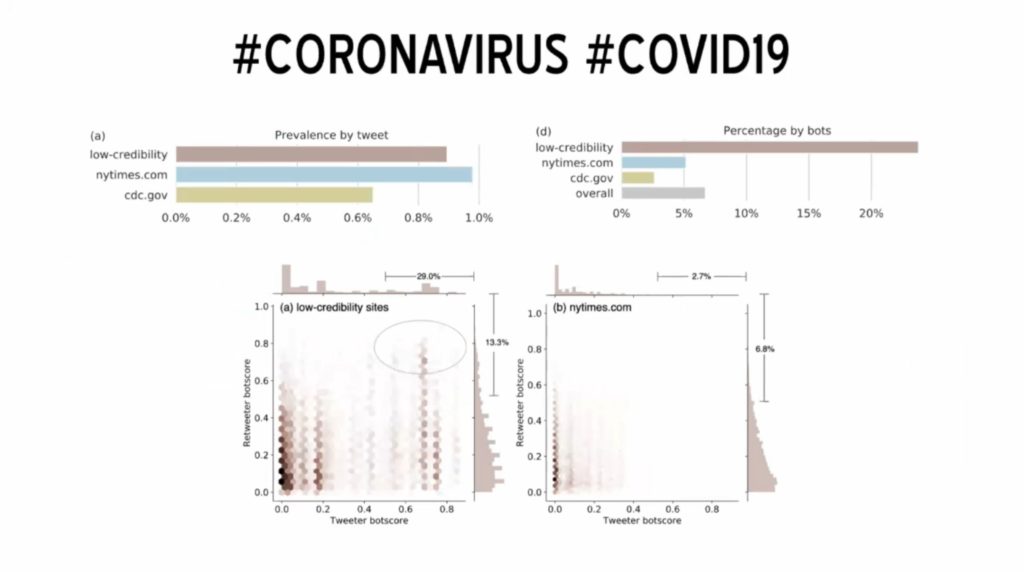

Humans make most of the retweets, but they retweet both real and fake news. This is the effect of the bots’ relentless repetition, some of them broadcasting a link to a piece of “fake news” several thousand times. The consequence is that humans publish both types of information, as shown in the example below about Covid-19. As you can see on the graph in the upper left corner, low-credibility information spreads at the same level as information from legitimate sources (NY Times or cdc.gov).

Other analyses show that when bots infiltrate 1% of the network, they can control it and cause a drop in the quality of the information broadcast.
