Algorithms have invaded our lives, yet they sometimes provoke strongly negative reactions from users. An article published in September 2022 offers a very interesting framework for analyzing this phenomenon. In this article, I analyze the 4 reasons behind negative reactions to algorithms and illustrate each of them with examples.

If you only have 30 seconds

- Algorithms sometimes provoke violent outbursts of rage from users.

- There are 4 reasons why user frustration turns into verbal abuse:

  - lack of knowledge of how the algorithm works and misunderstanding of its results

  - algorithmic errors

  - a conflict between the user's interests and the algorithm's

  - lack of control over the algorithm

- Solutions do exist:

  - rethink the design of algorithms to maximize user satisfaction

  - make algorithms more transparent and explain how they work

  - give users the ability to adjust how the algorithm works

Rage against algorithms takes different forms. The authors of the research cite the explosion of the hashtag #RIPtwitter when the platform decided to rank messages with a recommendation algorithm instead of displaying them in reverse chronological order. At the time, as Trendsmap data on the hashtag shows, users reacted violently. The hashtag #RIPtwitter also resurfaces periodically, whenever anger against the platform peaks.
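To make the change concrete, here is a minimal Python sketch contrasting the two orderings. The `Post` fields and the `predicted_engagement` score are hypothetical stand-ins; the research does not describe Twitter's actual ranking signals.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    author: str
    posted_at: datetime
    # Hypothetical model output: estimated chance the user engages with the post.
    predicted_engagement: float


def reverse_chronological(posts: list[Post]) -> list[Post]:
    """Newest posts first: the timeline order users were used to."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def ranked(posts: list[Post]) -> list[Post]:
    """Highest predicted engagement first, regardless of when a post was written."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


posts = [
    Post("alice", datetime(2020, 3, 6, 9, 0), 0.2),
    Post("bob", datetime(2020, 3, 6, 8, 0), 0.9),
    Post("carol", datetime(2020, 3, 5, 22, 0), 0.5),
]
print([p.author for p in reverse_chronological(posts)])  # ['alice', 'bob', 'carol']
print([p.author for p in ranked(posts)])                 # ['bob', 'carol', 'alice']
```

Both feeds contain exactly the same posts; only the ordering logic differs.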

The same phenomenon occurred on Instagram in 2017, with the hashtag #RIPinstagram, for the same reason. Algorithmic recommendation thus seems to crystallize negative feelings.

Yet recommendation algorithms underpin the success of many companies (Netflix, Google, TikTok) and contribute to customer satisfaction. It is therefore worth asking what drives users to react so negatively.

Explanation 1: Lack of knowledge

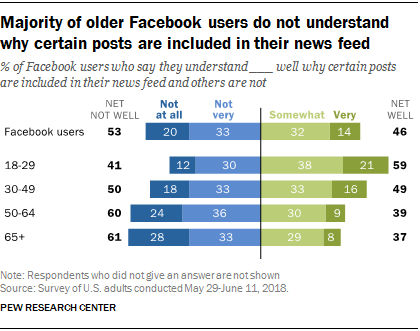

The lack of understanding of how algorithms work is well documented. In research published in 2018, the Pew Research Center found that 53% of Facebook users did not understand how the algorithm that populates their news feed worked. This figure reached 60% among 50- to 64-year-olds and 61% among those aged 65 and over.

Understanding how a recommendation algorithm works is still a complex subject, reserved for a few insiders. However, improving users' understanding of an algorithm pays off: as one experiment shows, understanding how the algorithm works significantly increases users' trust and satisfaction.

Explanation 2: Algorithmic errors and biases

Algorithms sometimes cause users severe harm. Algorithmic errors can have serious consequences, as in the case of the man arrested in Michigan after an erroneous algorithmic identification. Other examples include tenants rejected after an algorithmic screening of their profile, and Dutch citizens financially ruined after the tax authorities misused an algorithm.

Algorithms used in the education sector are also frequently criticized. In France, the selection algorithm for university admissions was manipulated, creating inequalities between candidates when they applied to higher education.

Finally, some communities may experience algorithmic discrimination when they believe they are unfairly targeted because of their identity. This happened on TikTok, where LGBTQIA+ creators felt that the algorithm made their content invisible; an audit confirmed these criticisms. Conversely, an algorithm can also overexpose content and push it to an overly large audience, which can leave its creators exposed to pressure from some users.

As you can see, the risks of using recommendation algorithms are numerous, and striking the right balance between value for the user and these risks is difficult.

Explanation 3: The interests of the user versus the interests of the algorithm

The example of Twitter

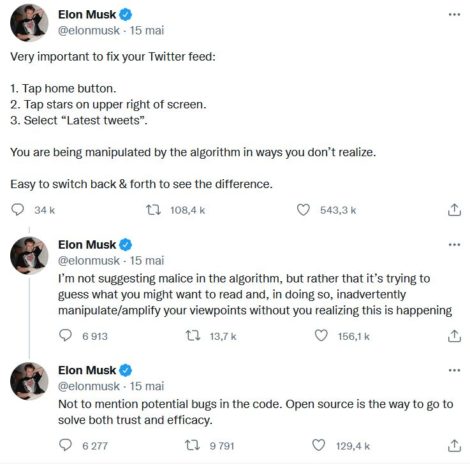

Twitter's algorithm allegedly amplifies beliefs and thereby polarizes viewpoints. Users of a platform sometimes feel "manipulated" by its algorithm. A series of tweets from Elon Musk illustrates this feeling. In these 3 tweets, published on May 15, 2022, he accuses the algorithm of "manipulating [users] in a way that [they] don't realize." In his second tweet, he mentions the filter bubble theorized by Eli Pariser.

In this view, the interests of the algorithm and those of users would not be aligned. This thesis is debatable because, as we have already seen, filter bubbles remain a theoretical possibility.

The example of Spotify

Spotify is another example of this conflict between the user's interests and the algorithm's. First, remember that Spotify is a platform that offers audio content to users and pays creators based on the number of listens. Users want to listen to content they like; creators want to be listened to (and therefore recommended) as much as possible. Spotify must therefore strike a subtle balance between user satisfaction and creator satisfaction.

Spotify's algorithm therefore faces a dilemma. Should it recommend the content most likely to be liked (usually produced by major labels) at the expense of lesser-known content from independent labels? Unfortunately, there is no perfect solution to this problem. Because users are prioritized (they are the ones who pay), independent creators may feel frustrated with Spotify's algorithm.
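One common way to frame this dilemma is as a re-ranking trade-off. The sketch below blends a predicted like probability with a bonus for independent releases; the `Track` fields, the linear blend, and the `indie_weight` parameter are illustrative assumptions, not a description of Spotify's algorithm.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    like_prob: float   # hypothetical predicted probability that the user likes the track
    independent: bool  # True if released by an independent label


def rerank(tracks: list[Track], indie_weight: float) -> list[Track]:
    """Order tracks by a linear blend of predicted satisfaction and indie exposure.

    indie_weight = 0.0 ranks purely by predicted satisfaction; larger values
    trade some predicted satisfaction for exposure of independent releases.
    """
    def score(t: Track) -> float:
        bonus = 1.0 if t.independent else 0.0
        return (1 - indie_weight) * t.like_prob + indie_weight * bonus

    return sorted(tracks, key=score, reverse=True)


catalog = [
    Track("Major hit", 0.9, False),
    Track("Indie gem", 0.6, True),
    Track("Major B-side", 0.5, False),
]
print([t.title for t in rerank(catalog, 0.0)])  # ['Major hit', 'Indie gem', 'Major B-side']
print([t.title for t in rerank(catalog, 0.3)])  # ['Indie gem', 'Major hit', 'Major B-side']
```

With `indie_weight=0.0` the major-label hit stays on top; raising it to 0.3 lets the independent track overtake it, at the cost of recommending something the model expects the user to like less.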

Explanation 4: Lack of control

Lack of control over algorithms is a recurring complaint among users and a major source of dissatisfaction. Several of the examples above relate to it.

Any change to the "algorithmic recipe" inevitably causes dissatisfaction, as Elon Musk's tweets showed. More recently, Instagram has been heavily criticized for becoming more like TikTok, including by recommending an excessive amount of Reels content. Influencers started an informal campaign demanding that the changes be reversed.

Users also feel a lack of control when an algorithm seems able to uncover their secrets without giving them any way to object. This is the case on TikTok, where the algorithm's accuracy makes users feel spied on.

How can we restore confidence in algorithms?

By their very nature, algorithms remain difficult for the average person to understand. Some are so complex that even their designers struggle to understand them. Under these conditions, how can we avoid the extreme reactions some users have?

There are 3 ways to think about it:

1/ Putting people at the center of recommendation system design

The GDPR popularized the idea of "privacy-by-design." Algorithm designers should likewise practice "satisfaction-by-design." Most algorithms are designed with the company's interests in mind, which leads to behaviors that can harm the user (see this reflection on the end of recommendation algorithms).

2/ Make the algorithms more transparent

Accepting algorithmic results requires a better understanding of how they are produced. Users will accept algorithmic errors more readily if they know why those errors occur, and their satisfaction will be higher.
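One simple way to make a recommendation explainable is an additive score, where each signal's contribution (weight × value) can be shown to the user as a reason. The signal names, weights, and the `score_with_reasons` helper below are hypothetical; real recommendation models are usually far less transparent than this linear sketch.

```python
def score_with_reasons(
    weights: dict[str, float],
    features: dict[str, float],
    top_k: int = 2,
) -> tuple[float, list[str]]:
    """Score an item with a linear model and return the signals that contributed most.

    Each contribution is weight * feature value, so the score is exactly the sum
    of the contributions, and the largest ones can be surfaced as "reasons".
    """
    contributions = {name: weights.get(name, 0.0) * value for name, value in features.items()}
    score = sum(contributions.values())
    reasons = sorted(contributions, key=contributions.get, reverse=True)[:top_k]
    return score, reasons


# Hypothetical signals for a single post in a feed.
weights = {"follows_author": 2.0, "topic_match": 1.0, "is_recent": 0.5}
features = {"follows_author": 1.0, "topic_match": 0.2, "is_recent": 1.0}
score, reasons = score_with_reasons(weights, features)
print(round(score, 2), reasons)  # 2.7 ['follows_author', 'is_recent']
```

A platform could then turn `reasons` into a message such as "Because you follow this author."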

3/ Increase the user’s control over the algorithms

The last approach is to give users back some control over how their feeds are personalized. Initiatives of this kind are multiplying, such as LinkedIn's option to hide certain content or authors from the feed. If you don't give users some control, they will try to manipulate the algorithm themselves; there is, for example, research on how LinkedIn's algorithm works.
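A control like the one LinkedIn offers can be as simple as filtering candidates before they are ranked. The sketch below is hypothetical: `FeedItem`, `FeedPreferences`, and `build_feed` are illustrative names, not LinkedIn's API.

```python
from dataclasses import dataclass, field


@dataclass
class FeedItem:
    author: str
    topic: str
    score: float  # hypothetical relevance score from the ranking model


@dataclass
class FeedPreferences:
    muted_authors: set[str] = field(default_factory=set)
    hidden_topics: set[str] = field(default_factory=set)


def build_feed(items: list[FeedItem], prefs: FeedPreferences) -> list[FeedItem]:
    """Drop items the user has opted out of, then rank the rest by score."""
    visible = [
        item
        for item in items
        if item.author not in prefs.muted_authors and item.topic not in prefs.hidden_topics
    ]
    return sorted(visible, key=lambda item: item.score, reverse=True)


items = [
    FeedItem("recruiter_x", "jobs", 0.95),
    FeedItem("colleague_a", "engineering", 0.7),
    FeedItem("influencer_b", "motivation", 0.8),
]
prefs = FeedPreferences(muted_authors={"influencer_b"}, hidden_topics={"jobs"})
print([item.author for item in build_feed(items, prefs)])  # ['colleague_a']
```

Because the user's choices are applied as hard filters, they take effect no matter how highly the model scores the hidden items.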